I have an Arcam rDAC, and the difference in sound between the USB (presumably asynchronous) and optical inputs is far from subtle: USB is noticeably brighter, while optical is more neutral. I've come across reports from others who experienced the same. Sure, jitter-correction algorithms can vary across inputs, or be missing altogether on some of them, but I'd expect the differences in SQ to be much subtler, since the digital streams reaching the DAC chip must be very close regardless of the input path. Why can this happen? The rDAC is based on a delta-sigma (DS) chip; perhaps Arcam decided to use different DSP filters depending on the input?

SQ differences between DAC inputs, why?

- Thread starter gvl

sKiZo

Hates received: 92644 43.20°N 85.50°W

Chipsets ...

Each mode has its own supporting hardware, hence the different sound. Each DAC implements the basic chipsets differently as well. Add to that, whatever computer you're using also has differences that will affect what you hear.

Is it fun nyet? <G>

My Maverick is a good example. Some swear by the S/PDIF (optical and coaxial) - I mostly just swear at it. That tells me it's not the DAC itself, but the computer, as all S/PDIF isn't created equal, or even implemented correctly. My HTPC has a supposedly upscale RealTek sound system, so go figure. I find that the USB input runs rings around either optical or coaxial even though it tops out at a lower resolution.

Haven't even tried the HDMI out ... never really felt a need to.

As far as jitter goes, that shouldn't really be a problem in this day and age if your system is fairly current and set up properly. A lot of what you may think is jitter is just noise and resource competition from all the other crap we like to plug into our computers nowadays. For any Windows-based computer, you're doing yourself a BIG favor using WASAPI ... next best thing to bit perfect it is. You need Vista or newer for that ...

PS ... if you're just talking about standalone inputs ... my bad. Same sort of thing holds true though. All depends on how well the DAC integrates with your other components.

Yet another PS ... I notice the Arcam uses a wall wart for power. A lot of these things are the bare minimum required to drive the unit. If all else fails, try swapping in a larger, better-filtered wart and see if that helps. Just make sure you match the polarity as well as the plug; yours looks to be center hot.


I'm still on XP, with Asio4All for USB and, fwiw, an SB Audigy 2 ZS Notebook with bit-accurate output set to "ON" for optical, so it should be close to WASAPI... I thought the interface chipsets in DACs were supposed to be bit-accurate, or close to it; are you saying they are not? While USB seemingly sounds "cleaner", I prefer the optical connection in my system, even though some consider it inferior to USB and coax.
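One way to test a "bit-accurate" claim on the source side is a digital loopback: play a test file out of the optical output, record it on another device's digital input at the same sample rate with no processing, and look for the original samples inside the recording. The sketch below assumes both have already been loaded as lists of integer samples (the loading step and the function name are hypothetical). It is a brute-force search, fine for short test files, and it only verifies the path up to the capture point; it cannot see what happens inside the DAC after its receiver.

```python
from typing import Optional, Sequence


def find_bit_perfect_offset(source: Sequence[int], capture: Sequence[int]) -> Optional[int]:
    """Return the sample offset at which `source` appears unchanged inside
    `capture`, or None if no exact match exists (i.e. not bit-perfect)."""
    src = list(source)
    cap = list(capture)
    n = len(src)
    # Slide the source along the capture and compare sample for sample.
    for offset in range(len(cap) - n + 1):
        if cap[offset:offset + n] == src:
            return offset
    return None


# The recording usually starts a little before playback, hence the leading zeros.
source = [120, -45, 3071, -2048, 7, 0, -1]
print(find_bit_perfect_offset(source, [0, 0, 0] + source + [0, 0]))  # 3

# Same data with a single LSB changed: no longer bit-perfect.
altered = [0, 0, 0] + [120, -45, 3071, -2047, 7, 0, -1] + [0, 0]
print(find_bit_perfect_offset(source, altered))  # None
```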

sKiZo

Off to google WASPI.

Probably have better luck googling WASAPI ... <G>

Short version: it's the sound handling built into Windows that replaced the old boat anchor, DirectSound. It uses fewer resources, has better integration, and bypasses an entire layer of digital BS when doing its thing. It first showed up in Windows Vista, and there were some major improvements that came along with W8. Pretty sure W10 saw no changes.

Couple tips ...

- Leave your system volume at 100% and use your player software to control what goes out to the DAC if possible. The rDAC doesn't have a volume control, and some player software will use the Windows volume anyway, so maybe not an option, but worth a try. That can clean up a lot of dropouts.

- Increase your buffer in your player software. (google google)

- Try turning off your anti-virus and firewall software (Offline of course). Some of that stuff can eat resources and cause all sorts of audio problems.

- Make sure you're using a decent cable that fits tight. Hopefully the Arcam came with a good one, but not always the case.

- Try disconnecting any other devices you don't really need just to test whether that makes a difference. My wireless keyboard/mouse was throwing all sorts of audio hash at my DAC when I first got going here.
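A minimal sketch of why a 100% setting matters for bit-perfect output: any digital volume below 100%, whether applied by Windows or by the player, rescales every sample and rounds it back to an integer, so the stream reaching the DAC is no longer identical to the file. The function names are hypothetical, and real volume controls usually work at higher internal precision and may add dither, which this sketch ignores.

```python
def apply_volume(samples, gain):
    """Hypothetical digital volume control: scale 16-bit PCM samples and
    round back to integers (no dither, no extra internal precision)."""
    out = []
    for s in samples:
        v = round(s * gain)
        out.append(max(-32768, min(32767, v)))  # clamp to the signed 16-bit range
    return out


def is_bit_perfect(source, delivered):
    """True only if every sample arrives unchanged."""
    return list(source) == list(delivered)


source = [0, 1, -1, 3, 1000, -32768, 32767, 12345]

print(is_bit_perfect(source, apply_volume(source, 1.0)))  # True: 100% leaves samples alone
print(is_bit_perfect(source, apply_volume(source, 0.8)))  # False: samples are recomputed

# Turning the volume down and back up digitally does not undo the rounding.
restored = apply_volume(apply_volume(source, 0.5), 2.0)
print(is_bit_perfect(source, restored))  # False
```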

Details, details, details ... it's all in the details. The chipset used may have perfect specs, but it all depends on how well it's implemented in the DAC's design. Something as simple as going cheap on a capacitor can make or break what you hear. That's where the differences come in anyway.

XP with Asio4All ... (shudder). You really need to look at upgrading. ;-}

At the risk of offending you, I'd say the same about the Audigy card. We're talking what ... 7 year old technology here. That's almost ancient in computer years ... Sound handling has come a LONG way since then.

All that said, if you can try some of the above tweaks and get good results ... good on ya! No sense spending bucks where not needed. Best o' luck with it!

Yes, I know, but XP with Asio4All and the Audigy work just fine and I'm happy with the results. I'm mostly streaming Spotify at 320 kbps anyway, so new hardware isn't going to help much. There are no dropouts and the sound is clean, just sonically different depending on the DAC input. Volume is at 100% in both the player and the system; in fact, the Audigy seems to bypass the Windows mixer in "bit-accurate" mode, as system volume has no effect on the signal. My findings correlate with several other reports, so it seems the differences are due more to the DAC than to my sources. It is just surprising that the difference between inputs is so noticeable in a product from a reputable company.

pete_mac

Super Member

As you say it's all zeroes and ones after all.

Yes, but it's still an analogue representation of those zeros and ones. You can get to a point where the quality of the transmission declines and you don't have the square wave you'd expect.

Different chips can most certainly sound different. I've compared different USB chipsets and different SPDIF chipsets against one another, in the very same DAC, and the difference is not subtle in some cases!

As with all digital audio (IMHO), the clock is king. Whether the receiver chip relies on a PLL or has a dedicated low-phase-noise clock (or, in many cases, a fairly vanilla clock!) running the show can make a noticeable difference to the sound.
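For a sense of scale, the textbook bound for random clock jitter at the point of conversion is SNR = -20·log10(2π·f·t_j), for a full-scale sine at frequency f sampled with RMS jitter t_j. The sketch below simply evaluates that formula; it models only the conversion clock and says nothing about how a particular receiver or PLL performs, or about what is audible.

```python
import math


def jitter_limited_snr_db(signal_freq_hz: float, rms_jitter_s: float) -> float:
    """Best-case SNR (dB) of a full-scale sine sampled by a clock with
    random RMS jitter: SNR = -20 * log10(2 * pi * f * t_j)."""
    return -20.0 * math.log10(2.0 * math.pi * signal_freq_hz * rms_jitter_s)


# A 20 kHz tone (the worst case for audio) against a few jitter levels.
for jitter_ps in (1000, 100, 10):
    snr = jitter_limited_snr_db(20_000, jitter_ps * 1e-12)
    print(f"{jitter_ps:>5} ps RMS jitter -> {snr:5.1f} dB")
# prints roughly 78.0, 98.0 and 118.0 dB: each 10x less jitter buys 20 dB
```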

I think a lot of people grossly simplify digital audio, and think that bits are bits so there can't possibly be any difference. Chips, (good!) circuit design, power supply design, clocks etc all come together to determine the net result.

I find it hard to believe a signal carrying Red Book material can significantly degrade over a 3 ft cable, unless we are talking about a really bad cable. It sounds like the receiving interface chipsets are not bit-accurate, as they run some sort of processing algorithm that does more harm than good when the incoming stream is actually pretty good.

gvl: Did you already contact Arcam and ask? Maybe the DAC upsamples by default or operates at the input quantisation and sample rate depending on which type of port is used...

Greetings from Munich!

Manfred / lini

I guess it doesn't hurt to try, though not sure how responsive and technical Arcam is going to be when responding to a question about an end of life product from someone who is not even an original owner.

gvl: Well, for one thing, you wouldn't have to mention that you aren't the original owner, and who knows, they might even be more willing to disclose technical details about a discontinued product than about a current one...

Oh, and btw, did you already try to check whether the difference is "measurable"? For example, you could generate a frequency sweep (e.g. with Audacity), let it play back via the two different inputs, record the output and look at it (for which you could also use Audacity) to determine whether the perceived difference is only audible or large enough to count as a measurable difference.
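If you'd rather script the test signal than use Audacity's Generate menu, here is a minimal sketch using only Python's standard library that writes an exponential (log) sine sweep to a 16-bit mono WAV file. The function name, file name and default parameters are arbitrary choices for illustration. You would then record the rDAC's analogue output for each input at the same level and compare the spectra, e.g. with Audacity's Analyze > Plot Spectrum.

```python
import math
import struct
import wave


def write_log_sweep(path, f_start=20.0, f_end=20_000.0, seconds=10.0,
                    sample_rate=44_100, amplitude=0.5):
    """Write a mono 16-bit exponential sine sweep from f_start to f_end (Hz).
    amplitude=0.5 is about -6 dBFS. Returns the number of samples written."""
    n = int(seconds * sample_rate)
    k = math.log(f_end / f_start)
    frames = bytearray()
    for i in range(n):
        t = i / sample_rate
        # Phase of an exponential sweep: instantaneous frequency is
        # f_start at t = 0 and rises to f_end at t = seconds.
        phase = 2 * math.pi * f_start * seconds / k * (math.exp(t * k / seconds) - 1)
        frames += struct.pack("<h", int(round(amplitude * 32767 * math.sin(phase))))
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 16-bit samples
        w.setframerate(sample_rate)
        w.writeframes(bytes(frames))
    return n


write_log_sweep("sweep_44k1.wav")  # 10 s, 20 Hz to 20 kHz, Red Book rate
```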

Greetings from Munich!

Manfred / lini

pete_mac

I was a digital cable sceptic, but as a result of direct personal experience I'm now a believer. I've found that even USB cables can sound different. Enter stage left the Curious Cables USB cable... dunno how to explain it, but it's sonically different from basic USB cables and also different from a solid 1" USB adaptor. Same goes for SPDIF cables: Canare sounds different from Audioquest solid silver vs Audio Principe vs Black Cat Veloce vs DH Labs. We're not talking enormous differences, but to my ears they are the icing on the cake when it comes to tuning the sound of a system. Anyhow, that's a topic for another thread methinks!

Most of the USB and SPDIF/optical receivers are bit-perfect, but they are certainly not equal - they introduce varying levels of jitter.

ASRC (Asynchronous Sample Rate Converter) chips sitting after the receiver chips are not bit-perfect, as they re-clock and upsample the incoming data before feeding it to the DAC chip. Some manufacturers eschew ASRC chips because they like to trumpet that their DACs are truly bit-perfect. ASRCs are intended to attenuate jitter in the incoming data, and most do this to a large degree. However, they are not a silver bullet for levelling the playing field between different digital inputs; sonic differences still exist and can be clearly heard in most cases.
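A minimal sketch of why an ASRC's output cannot be bit-perfect: changing the sample rate means computing new samples that fall between the original ones. Real ASRC chips use long polyphase filters and continuously track the incoming clock; the naive linear-interpolation resampler below is only a crude stand-in to show the principle, not how any particular chip works.

```python
import math


def resample_linear(samples, src_rate, dst_rate):
    """Naive linear-interpolation resampler: a crude stand-in for an ASRC."""
    n_out = int(len(samples) * dst_rate / src_rate)
    out = []
    for j in range(n_out):
        pos = j * src_rate / dst_rate  # where output sample j falls on the input timeline
        i = int(pos)
        frac = pos - i
        nxt = samples[min(i + 1, len(samples) - 1)]
        out.append(round(samples[i] * (1 - frac) + nxt * frac))
    return out


# 10 ms of a ~2.1 kHz tone at 44.1 kHz, as 16-bit integers.
original = [round(10_000 * math.sin(0.3 * n)) for n in range(441)]

upsampled = resample_linear(original, 44_100, 48_000)
round_trip = resample_linear(upsampled, 48_000, 44_100)

print(len(upsampled), len(round_trip))  # 480 441
print(round_trip == original)           # False: the samples were recomputed
```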

sKiZo

Not sure where the confusion comes in ... It's not the ones and zeroes that are different ... it's how they're processed. There's a reason for the popularity of "op amp rolling" ... I've done that and am simply amazed with the results. I've done that with a lot of digital circuits here, up to and including the use of discrete components ...

Even something as simple as changing brands can make a world of difference. I replaced all the Malaysian op amps in an old Carver with Texas Instruments "select" equivalents and discovered a whole nuther level I'd been missing. That was complemented by newer-tech chips on adapters, which really made it sing.

And ya ... ditto for cables. Swapping out to a mid quality Pangea USB cable took care of some leftover hash from my other changes, complementing the upgraded discrete op amps, regulators, and caps in my DAC.

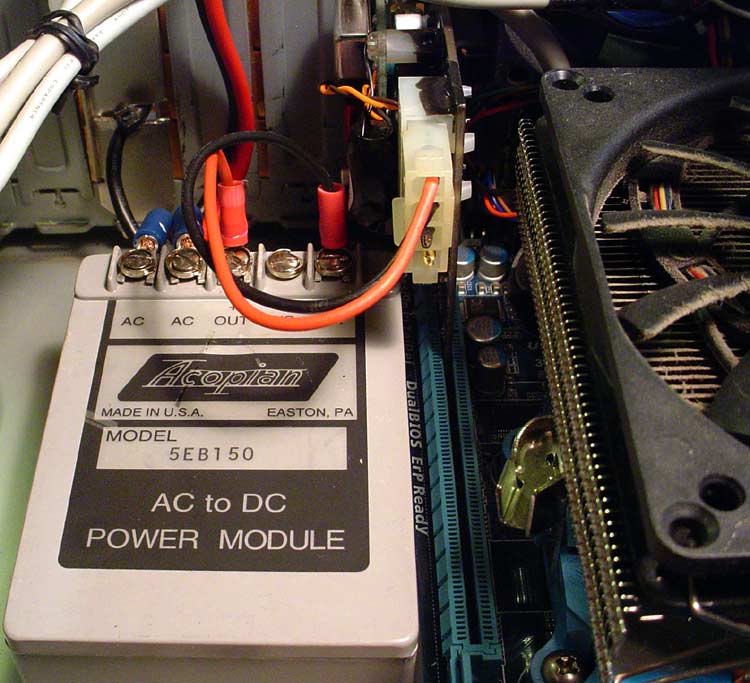

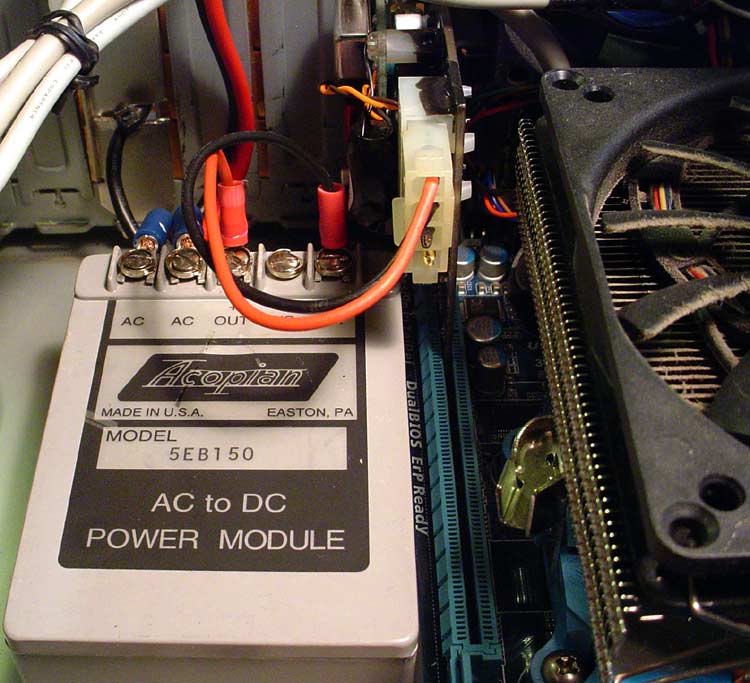

There's a point where improvements become incremental at best, but still worth the effort and expense. If you really want to get crazy, don't forget the other end of the USB cable. Not a big market for "audiophile grade" USB expansion cards, but they're out there. And in there, for that matter, on my rig ...

Only thing plugged into that card is the DAC (and an ADC I use occasionally for ripping vinyl). Next step there was to go with a dedicated power supply for that card alone ...

At this point, I think I've covered the bases ... but I've thought that before. ;-}

Are ASRCs commonly found on S/PDIF paths? I seem to remember reading that jitter correction often is only implemented on the USB DAC input, this could explain the differences I'm hearing as USB sounds a bit more "processed".

pete_mac

Anyone here familiar with USB audio transmission? Is there any sort of error correction built into the protocol, or into S/PDIF for that matter?

USB audio is transmitted using isochronous transfers. These are not error-corrected: a packet that fails its CRC check is not resent, unlike bulk and interrupt transfers, which are retried.
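To make the distinction concrete: every USB data packet carries a CRC-16, so a corrupted packet is detected either way; the difference is what happens next. Below is a toy model, not real USB stack code (the `receive_*` functions are hypothetical): the CRC routine uses the CRC-16 parameters of USB data packets, bulk delivery retries on a bad CRC, and isochronous delivery simply loses the packet. What a DAC does about a lost packet (mute, repeat, interpolate) is up to its designer.

```python
from typing import Callable, Optional, Tuple


def crc16_usb(data: bytes) -> int:
    """CRC-16 with the USB data-packet parameters: polynomial 0x8005
    (processed bit-reversed as 0xA001), initial value 0xFFFF, final XOR 0xFFFF."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc ^ 0xFFFF


def receive_isochronous(payload: bytes, crc: int) -> Optional[bytes]:
    """Isochronous: the error is detected, but there is no handshake and no resend."""
    return payload if crc16_usb(payload) == crc else None


def receive_bulk(send: Callable[[], Tuple[bytes, int]], max_attempts: int = 3) -> Optional[bytes]:
    """Bulk: a bad CRC means no ACK, so the host sends the same packet again."""
    for _ in range(max_attempts):
        payload, crc = send()
        if crc16_usb(payload) == crc:
            return payload
    return None


packet = b"\x01\x02\x03\x04"
crc = crc16_usb(packet)
corrupted = b"\x01\x02\x03\x05"  # one flipped bit on the wire

print(receive_isochronous(corrupted, crc))  # None: that slice of audio is simply gone
attempts = iter([(corrupted, crc), (packet, crc)])
print(receive_bulk(lambda: next(attempts)))  # b'\x01\x02\x03\x04' after one retry
```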

It really depends upon the design of the DAC. Not all DACs feature ASRCs, and not all DACs that do feature ASRCs apply it to the USB input.

sKiZo

... and even if they do, they don't necessarily apply it correctly ... ;-}

Same holds true for S/PDIF ... it IS a standard, but apparently open to fairly loose interpretation. I'm sure Mr. Sony is rolling in his grave thinking on some of the shortcuts and ad libs manufacturers take.
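For reference, the S/PDIF line code itself is simple: IEC 60958 uses biphase-mark coding, which embeds the clock in the data (there is a transition at every bit-cell boundary), so the receiver has to recover its clock from the incoming stream, typically with a PLL. A minimal sketch of the coding alone, leaving out the preambles and subframe layout of the real format; the function names are illustrative.

```python
def biphase_mark_encode(bits, start_level=0):
    """Encode bits as pairs of half-cell line levels (0/1).
    The level always toggles at the start of a bit cell (this carries the clock)
    and toggles again mid-cell only when the bit is 1."""
    level = start_level
    halves = []
    for b in bits:
        level ^= 1          # transition at every cell boundary
        halves.append(level)
        if b:
            level ^= 1      # extra mid-cell transition encodes a 1
        halves.append(level)
    return halves


def biphase_mark_decode(halves):
    """A bit is 1 if its two half-cells differ; absolute polarity does not matter."""
    return [int(halves[i] != halves[i + 1]) for i in range(0, len(halves), 2)]


bits = [1, 0, 1, 1, 0, 0, 1]
line = biphase_mark_encode(bits)
print(line)
print(biphase_mark_decode(line) == bits)                    # True
print(biphase_mark_decode([1 - h for h in line]) == bits)   # True: swapped polarity still decodes
```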
